Orange Workflows

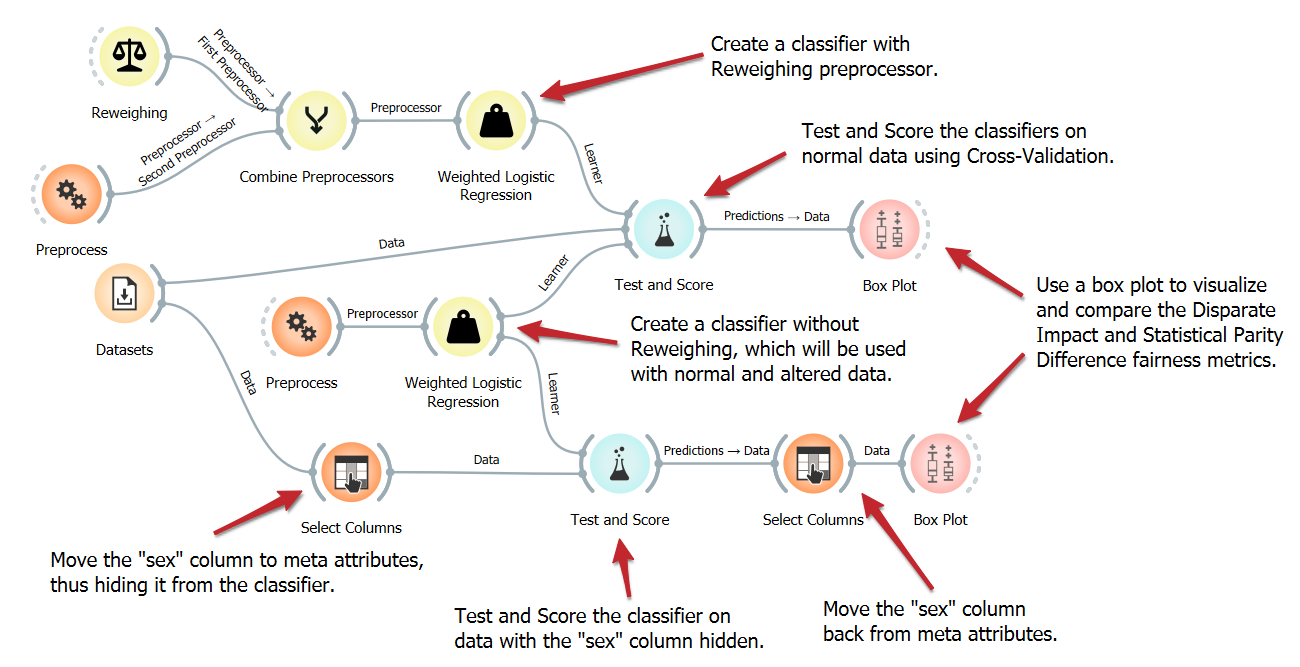

Dataset Bias Examination

Understanding the potential biases within datasets is crucial for fair machine-learning outcomes. This workflow detects dataset bias using a straightforward procedure. After loading the dataset, we add specific fairness attributes to it, which are essential for our calculations. We then compute the fairness metrics via the Dataset Bias widget and visualize the results in a Box Plot.
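To make the idea of a dataset-level fairness metric concrete, here is a minimal sketch of two widely used ones, statistical parity difference and disparate impact. It is an illustration only and does not claim to reproduce what the Dataset Bias widget computes internally; the toy labels, the group names "a" and "b", and the function names are all assumptions.

```python
def favorable_rate(labels, groups, group):
    """Share of instances in `group` that have the favorable label (1)."""
    selected = [y for y, g in zip(labels, groups) if g == group]
    return sum(selected) / len(selected)


def dataset_bias(labels, groups, privileged, unprivileged):
    """Compare favorable-label rates of the two groups."""
    p_priv = favorable_rate(labels, groups, privileged)
    p_unpriv = favorable_rate(labels, groups, unprivileged)
    return {
        # 0 means both groups receive the favorable label equally often.
        "statistical_parity_difference": p_unpriv - p_priv,
        # 1 means both groups receive the favorable label equally often.
        "disparate_impact": p_unpriv / p_priv,
    }


# Hypothetical toy data: 1 is the favorable label, "a" is the privileged group.
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = dataset_bias(labels, groups, privileged="a", unprivileged="b")
print(metrics)
```

In this toy data, group "a" gets the favorable label three times out of four and group "b" once out of four, so the difference is negative and the ratio is below 1, both indicating bias against group "b".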

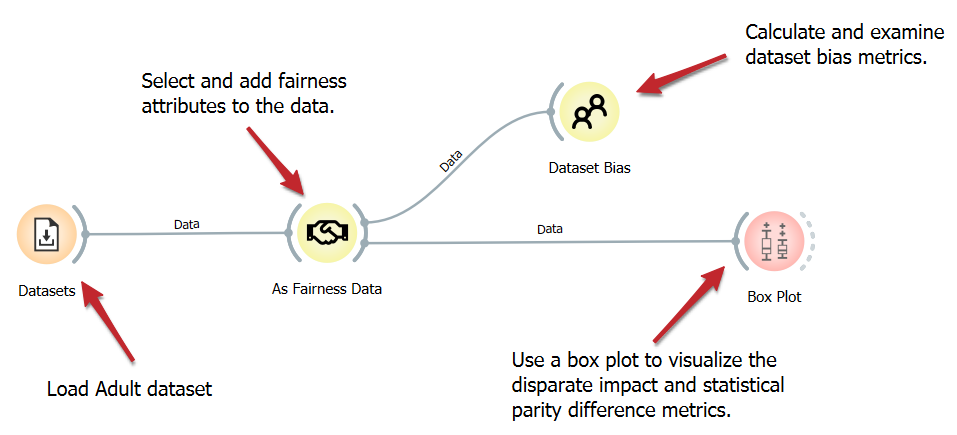

Reweighing a Dataset

Detecting bias is only the first step in ensuring fair machine learning. The next step is to mitigate the bias. This workflow illustrates removing bias at the dataset level using the Reweighing widget. First, we split the data into training and validation subsets. We then check for bias in the validation set before reweighing. Using the training set, we fit the reweighing algorithm and apply it to the validation set. Finally, we check for bias in the reweighed validation set. We can also visualize the effect of the reweighing using a Box Plot.
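The fit-on-training, apply-to-validation pattern can be sketched in a few lines. The example assumes the standard reweighing scheme, in which each combination of group and label gets the weight P(group) · P(label) / P(group, label); it is not the widget's own code, and the toy data and function names are hypothetical. It also assumes every (group, label) combination in the validation set was seen during fitting.

```python
from collections import Counter


def fit_reweighing(groups, labels):
    """Learn one weight per (group, label) combination from training data."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * count)
        for (g, y), count in joint_counts.items()
    }


def apply_reweighing(weights, groups, labels):
    """Assign the learned weights to the instances of another dataset."""
    return [weights[(g, y)] for g, y in zip(groups, labels)]


def weighted_favorable_rate(labels, groups, sample_weights, group):
    """Weighted share of favorable labels (1) within one group."""
    rows = [(y, w) for y, g, w in zip(labels, groups, sample_weights) if g == group]
    return sum(y * w for y, w in rows) / sum(w for _, w in rows)


# Hypothetical training split: group "a" is favored 6:2, group "b" only 2:6.
train_groups = ["a"] * 8 + ["b"] * 8
train_labels = [1] * 6 + [0] * 2 + [1] * 2 + [0] * 6

# Hypothetical validation split with the same kind of imbalance.
val_groups = ["a"] * 4 + ["b"] * 4
val_labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = fit_reweighing(train_groups, train_labels)
val_weights = apply_reweighing(weights, val_groups, val_labels)

rate_a = weighted_favorable_rate(val_labels, val_groups, val_weights, "a")
rate_b = weighted_favorable_rate(val_labels, val_groups, val_weights, "b")
print(rate_a, rate_b)
```

Under-represented combinations, such as a favorable label in group "b", receive weights above 1, and over-represented ones receive weights below 1, which pulls the weighted favorable rates of the two groups together.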

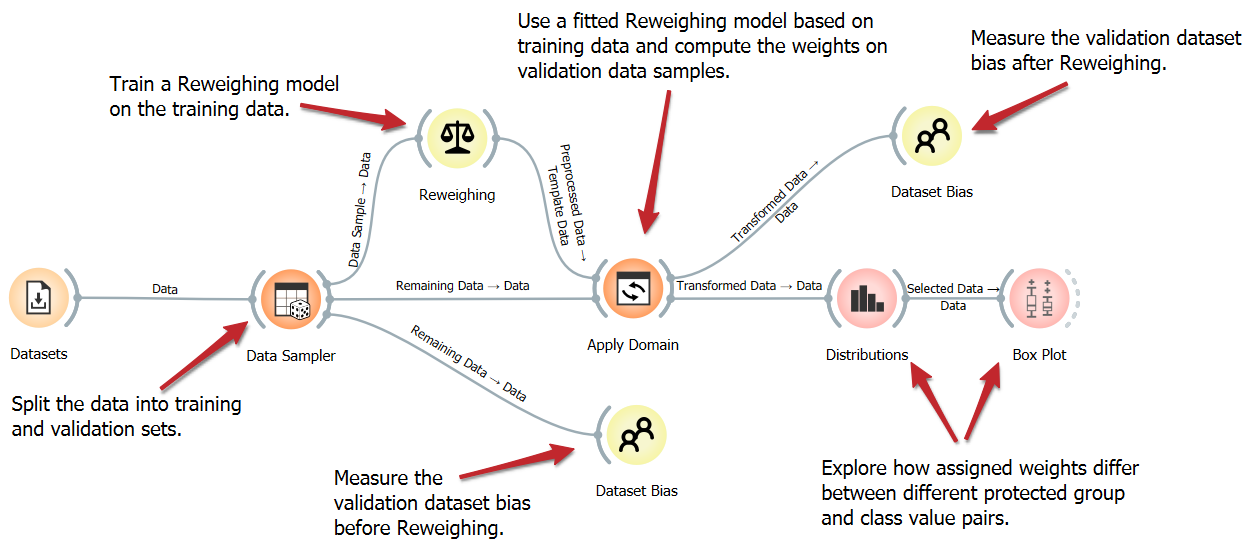

Reweighing as a Preprocessor

We can use the reweighing fairness algorithm for more than just adding weights to a dataset. It can also be used as a preprocessor for a specific model. This workflow illustrates how to use the Reweighing widget as a preprocessor for the Logistic Regression model. First, we load the dataset and pass it to the Test and Score widget, which we will use to evaluate our model. Next, we connect a Logistic Regression model, which has the Reweighing widget as its preprocessor, to the Test and Score widget. Doing so ensures our data gets reweighed before the model learns from it. We compare these results against those derived from a Logistic Regression model without reweighing and visualize both using a Box Plot.
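The idea of reweighing as a preprocessor can be sketched as a learner that computes instance weights from whatever data it is given and then fits a weighted model on that same data. The sketch below uses a small hand-written weighted logistic regression rather than Orange's Logistic Regression widget; all function names, the toy data, and the learning-rate and epoch settings are assumptions made for illustration.

```python
import math
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight per instance: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts, label_counts = Counter(groups), Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def fit_logistic(X, y, sample_weight, lr=0.5, epochs=2000):
    """Weighted logistic regression fitted by plain gradient descent."""
    coef, bias = [0.0] * len(X[0]), 0.0
    total = sum(sample_weight)
    for _ in range(epochs):
        grad_coef, grad_bias = [0.0] * len(coef), 0.0
        for row, target, w in zip(X, y, sample_weight):
            z = bias + sum(c * v for c, v in zip(coef, row))
            error = w * (1.0 / (1.0 + math.exp(-z)) - target)
            grad_bias += error
            for j, v in enumerate(row):
                grad_coef[j] += error * v
        bias -= lr * grad_bias / total
        coef = [c - lr * g / total for c, g in zip(coef, grad_coef)]
    return coef, bias


def fit_reweighed(X, y, groups):
    """Preprocess, then fit: weights come only from the data passed in."""
    return fit_logistic(X, y, reweighing_weights(groups, y))


# Hypothetical toy data; the only feature is an indicator for group "a".
groups = ["a"] * 4 + ["b"] * 4
X = [[1.0] if g == "a" else [0.0] for g in groups]
y = [1, 1, 1, 0, 1, 0, 0, 0]

plain_coef, _ = fit_logistic(X, y, [1.0] * len(y))
reweighed_coef, _ = fit_reweighed(X, y, groups)
print(plain_coef, reweighed_coef)
```

Because the weights are computed inside `fit_reweighed`, an evaluation loop that calls it once per training fold never lets test instances influence the weights. In this toy data the unweighted model learns a clearly positive coefficient for membership in group "a", while the reweighed model's coefficient stays essentially at zero.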

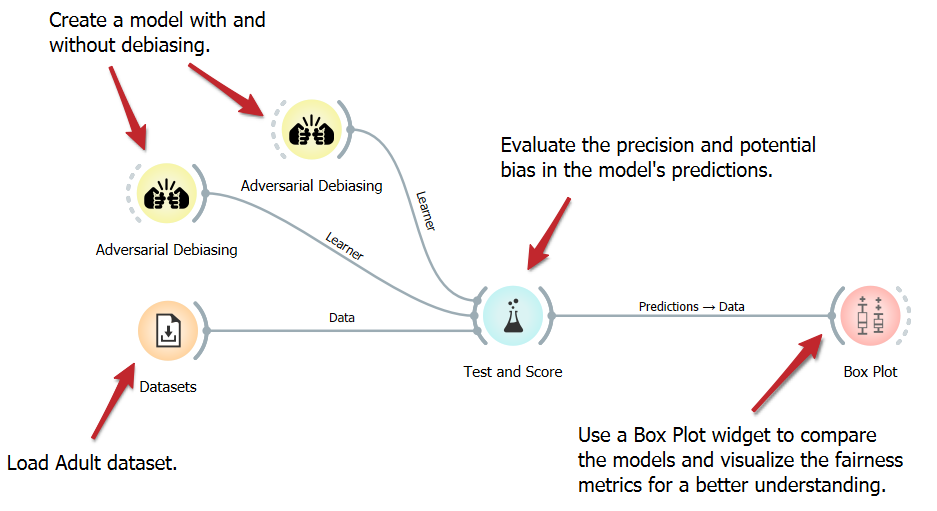

Adversarial Debiasing

The easiest method to address bias in machine learning is to use a bias-aware model. This approach eliminates the need for fairness preprocessing or postprocessing. In this workflow, we will employ a bias-aware model named Adversarial Debiasing for classification. We will train two versions of this model: one with and one without debiasing. Finally, we will compare and display the fairness metrics using a Box Plot widget.

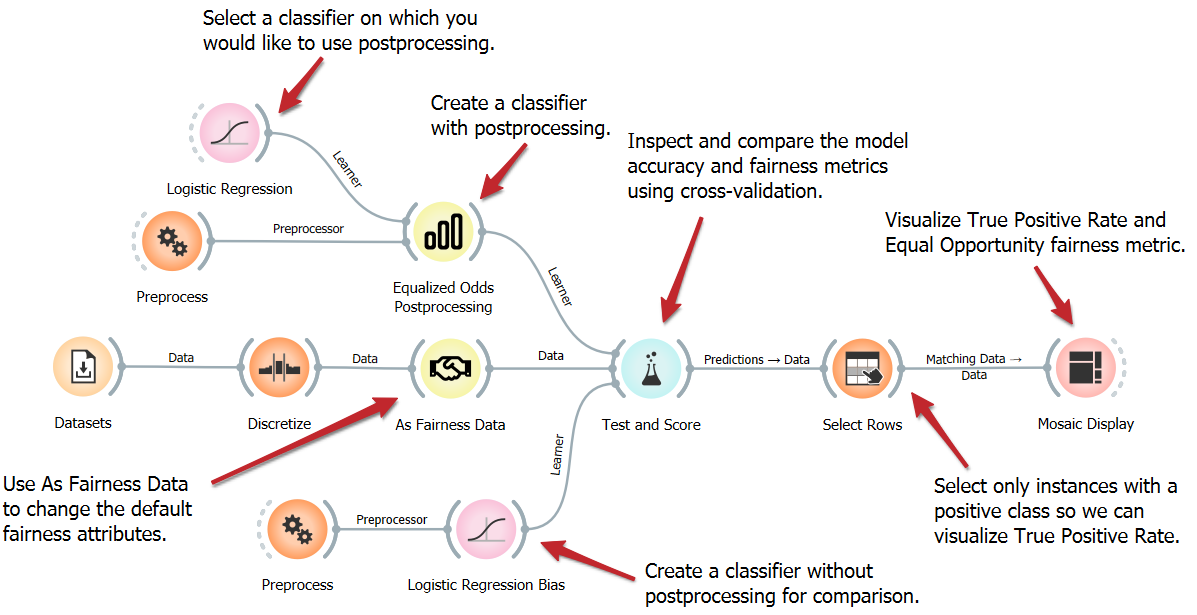

Equalized Odds Postprocessing

Another way to mitigate bias is to use a postprocessing algorithm on the model’s predictions. This workflow illustrates using the Equalized Odds Postprocessing widget as a postprocessor for the Logistic Regression model. To use the postprocessor, we connect any model to the Equalized Odds Postprocessing widget along with any needed preprocessors. Doing so ensures our model’s predictions get postprocessed before we evaluate them.
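The criterion that this kind of postprocessing targets can be checked directly: equalized odds asks that the true positive rate and the false positive rate be the same across groups. The sketch below only measures those gaps and does not implement the postprocessing algorithm itself; the function names and toy predictions are hypothetical, and it assumes each group contains both positive and negative instances.

```python
def group_rates(y_true, y_pred, groups, group):
    """True positive rate and false positive rate within one group."""
    pairs = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
    positives = [p for t, p in pairs if t == 1]
    negatives = [p for t, p in pairs if t == 0]
    tpr = sum(positives) / len(positives)
    fpr = sum(negatives) / len(negatives)
    return tpr, fpr


def equalized_odds_gaps(y_true, y_pred, groups, privileged, unprivileged):
    """Differences in TPR and FPR; both are 0 under equalized odds."""
    tpr_priv, fpr_priv = group_rates(y_true, y_pred, groups, privileged)
    tpr_unpriv, fpr_unpriv = group_rates(y_true, y_pred, groups, unprivileged)
    return tpr_unpriv - tpr_priv, fpr_unpriv - fpr_priv


# Hypothetical true labels and model predictions for two groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

tpr_gap, fpr_gap = equalized_odds_gaps(y_true, y_pred, groups, "a", "b")
print(tpr_gap, fpr_gap)
```

Here the model finds every positive in group "a" but only half of them in group "b", so the true positive rate gap is negative; a postprocessor would adjust the predictions to shrink both gaps.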

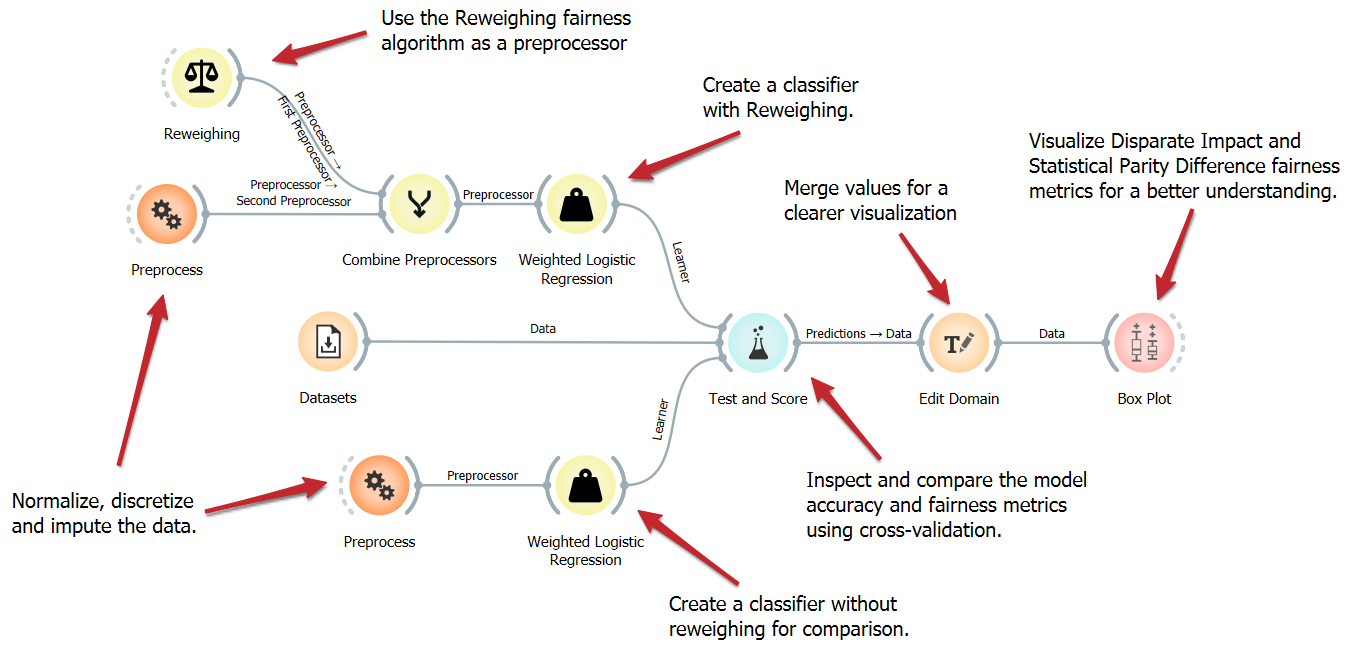

Hiding Protected Attribute

Why would we use fairness algorithms instead of simply removing the protected attribute to avoid bias? This workflow illustrates why that is not such a good idea. We compare the predictions of a model using Reweighing to those of a model trained on data with the protected attribute removed. We use Box Plot to visualize and compare common fairness metrics.
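One commonly cited reason is that other features can act as proxies for the protected attribute. The following sketch illustrates this with a hypothetical `neighborhood` feature and a hand-written decision rule standing in for a trained model; neither comes from the workflow itself, and the data are invented for illustration.

```python
def positive_rate(predictions, groups, group):
    """Share of instances in `group` that receive a positive prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)


# Hypothetical data: the protected attribute is NOT given to the model,
# but the neighborhood feature is strongly associated with it.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
neighborhood = ["north", "north", "north", "south",
                "north", "south", "south", "south"]

# Stand-in for a model trained without the protected attribute:
# it predicts the favorable outcome (1) for the "north" neighborhood only.
predictions = [1 if n == "north" else 0 for n in neighborhood]

# How often the proxy alone reveals the hidden group membership.
proxy_agreement = sum(
    (n == "north") == (g == "a") for n, g in zip(neighborhood, groups)
) / len(groups)

# Statistical parity difference of the predictions between the groups.
parity_difference = (
    positive_rate(predictions, groups, "b")
    - positive_rate(predictions, groups, "a")
)
print(proxy_agreement, parity_difference)
```

Even though the rule never sees the protected attribute, its predictions still favor group "a", because the neighborhood feature carries much of the same information.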