By: Žan Mervič, Sep 19, 2023

Why Removing Features Isn't Enough

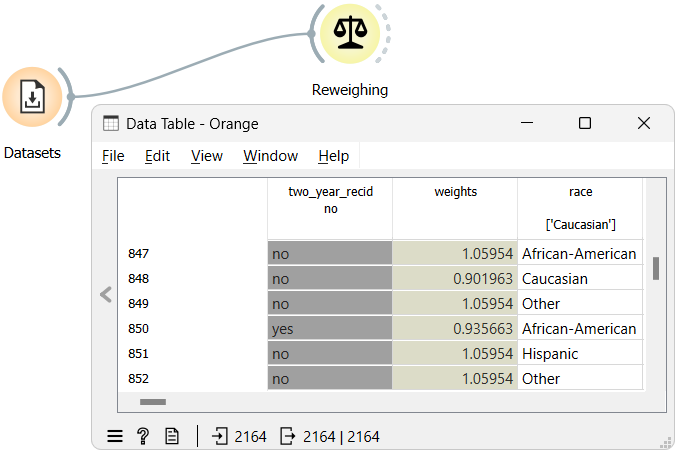

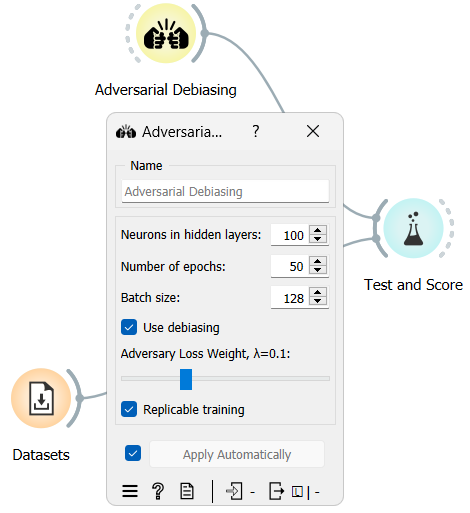

In this blog post, we confront a common misconception: that simply removing a protected attribute from a dataset eliminates bias from a model's predictions. Our case study shows that models trained without these attributes still produce biased results. The reason is that other features correlate with the protected attribute and act as proxies for it, so the model picks up the protected information indirectly. Our conclusion? There is no sidestepping the need for specialized fairness algorithms.
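The proxy effect can be reproduced in a few lines. The sketch below uses Python's standard library only, and everything in it is an illustrative assumption rather than the case study's data or setup. It builds a synthetic protected attribute `a`, a single hypothetical proxy feature `z` constructed to correlate with `a`, and historical labels `y` skewed in favor of group `a = 1`. It then trains a one-feature logistic regression on `z` alone, so the model never sees `a`. Finally, it compares positive-prediction rates across the two groups using statistical parity difference (unprivileged rate minus privileged rate).

```python
import math
import random


def make_data(n=1000, seed=0):
    """Hypothetical synthetic data: protected attribute `a`, a proxy `z`
    correlated with `a`, and historically biased labels `y`."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        a = rng.random() < 0.5                   # protected attribute (group membership)
        z = float(a) + rng.gauss(0.0, 0.3)       # proxy feature that tracks `a` closely
        y = rng.random() < (0.8 if a else 0.2)   # biased historical outcome
        rows.append((int(a), z, int(y)))
    return rows


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def train_logreg(xs, ys, lr=1.0, epochs=500):
    """One-feature logistic regression fitted by full-batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y   # gradient of log-loss w.r.t. the logit
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return w, b


def positive_rate(preds, groups, g):
    """Share of positive predictions within group `g`."""
    sel = [p for p, a in zip(preds, groups) if a == g]
    return sum(sel) / len(sel)


rows = make_data()
groups = [a for a, _, _ in rows]
zs = [z for _, z, _ in rows]
ys = [y for _, _, y in rows]

# The protected attribute is dropped: the model is trained on the proxy `z` only.
w, b = train_logreg(zs, ys)
preds = [int(sigmoid(w * z + b) > 0.5) for z in zs]

rate_1 = positive_rate(preds, groups, 1)  # privileged group in this toy setup
rate_0 = positive_rate(preds, groups, 0)  # unprivileged group in this toy setup
spd = rate_0 - rate_1                     # statistical parity difference
print(f"P(pred=1 | a=1) = {rate_1:.2f}")
print(f"P(pred=1 | a=0) = {rate_0:.2f}")
print(f"statistical parity difference = {spd:.2f}")
```

Because `z` is nearly a copy of `a` plus noise, the fitted decision boundary on `z` effectively splits the data by group, and the printed parity difference stays far from zero even though `a` was never a model input.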